Metaverse

ChatGPT leaks personal info: Woman asks AI about her plant, gets SHOCKED with response, ‘Scariest thing seen’ – Crypto News

ChatGPT, the generative AI chatbot that recently made waves online for its Ghibli-style images, is in the news again, this time for an erroneous output. When a user asked ChatGPT what was wrong with her plant, the chatbot returned a startling response: someone else’s personal data.

Calling it the “scariest thing” she has seen AI do, the user wrote in a LinkedIn post, “I uploaded a few pics of my Sundari (peacock plant) on ChatGPT—just wanted help figuring out why her leaves were turning yellow.” Instead of plant care advice, she said, ChatGPT gave her someone else’s personal data. According to her post, the response contained “Mr. Chirag’s full CV. His CA student registration number. His principal’s name and ICAI membership number. And confidently called it Strategic Management notes.”

Attached here are the screenshots of the conversation the user claims to have had with the chatbot:

Narrating the harrowing experience, Chartered Accountant Pruthvi Mehta added in her post, “I just wanted to save a dying leaf. Not accidentally download someone’s entire career. It was funny for like 3 seconds—until I realised this is someone’s personal data.”

The post, which questions the overuse of AI technology, is doing the rounds on social media and has amassed over 900 reactions and several comments. Suggesting that the response was ChatGPT’s backlash to being overused for Ghibli art, she asked, “Can we still keep Faith on AI.”

Check netizen reactions here

Strong reactions poured in from internet users. One user remarked, “I am sure the data is made up and incorrect! Pruthvi.” Another commented, “This is surprising, since the prompt asked something entirely different.”

A third user wrote, “Wondering if these are real details of someone, or it’s just fabricated it. Nevertheless, seems a bit concerning, but looks more like a bug in their algorithms.” A fourth user replied, “I don’t see this is possible, unless the whole chat thread must have something in the link with this.”

-

Technology3 days ago

Technology3 days agoChatGPT users are mass cancelling OpenAI subscriptions after GPT-5 launch: Here’s why – Crypto News

-

Technology1 week ago

Binance to List Fireverse (FIR)- What You Need to Know Before August 6 – Crypto News

-

Blockchain1 week ago

Blockchain1 week agoAltcoin Rally To Commence When These 2 Signals Activate – Details – Crypto News

-

Technology1 week ago

Technology1 week agoBest computer set under ₹20000 for daily work and study needs: Top 6 affordable picks students and beginners – Crypto News

-

Cryptocurrency1 week ago

Cardano’s NIGHT Airdrop to Hit 2.2M XRP Wallets — Find Out How Much You Can Get – Crypto News

-

Technology1 week ago

Beyond Billboards: Why Crypto’s Future Depends on Smarter Sports Sponsorships – Crypto News

-

Cryptocurrency1 week ago

Cryptocurrency1 week agoStablecoins Are Finally Legal—Now Comes the Hard Part – Crypto News

-

Cryptocurrency1 week ago

Cryptocurrency1 week agoTron Eyes 40% Surge as Whales Pile In – Crypto News

-

Technology1 week ago

Technology1 week agoGoogle DeepMind CEO Demis Hassabis explains why AI could replace doctors but not nurses – Crypto News

-

Business1 week ago

Analyst Spots Death Cross on XRP Price as Exchange Inflows Surge – Is A Crash Ahead ? – Crypto News

-

De-fi1 week ago

De-fi1 week agoTON Sinks 7.6% Despite Verb’s $558M Bid to Build First Public Toncoin Treasury Firm – Crypto News

-

Cryptocurrency1 week ago

Cryptocurrency1 week agoEthereum Hits Major 2025 Year Peak Despite Price Dropping to $3,500 – Crypto News

-

Blockchain1 week ago

Blockchain1 week agoXRP Must Hold $2.65 Support Or Risk Major Breakdown – Analyst – Crypto News

-

Blockchain1 week ago

Blockchain1 week agoXRP Must Hold $2.65 Support Or Risk Major Breakdown – Analyst – Crypto News

-

others1 week ago

Japan CFTC JPY NC Net Positions down to ¥89.2K from previous ¥106.6K – Crypto News

-

Technology1 week ago

Technology1 week agoOppo K13 Turbo, K13 Turbo Pro to launch in India on 11 August: Expected price, specs and more – Crypto News

-

Blockchain7 days ago

Shiba Inu Team Member Reveals ‘Primary Challenge’ And ‘Top Priority’ Amid Market Uncertainty – Crypto News

-

others7 days ago

others7 days agoBank of America CEO Denies Alleged Debanking Trend, Says Regulators Need To Provide More Clarity To Avoid ‘Second-Guessing’ – Crypto News

-

Technology7 days ago

Technology7 days agoOpenAI releases new reasoning-focused open-weight AI models optimised for laptops – Crypto News

-

Blockchain6 days ago

Blockchain6 days agoCrypto Market Might Be Undervalued Amid SEC’s New Stance – Crypto News

-

De-fi5 days ago

De-fi5 days agoCoinbase Pushes for ZK-enabled AML Overhaul Just Months After Data Breach – Crypto News

-

Technology1 week ago

Will The First Spot XRP ETF Launch This Month? SEC Provides Update On Grayscale’s Fund – Crypto News

-

Technology1 week ago

Technology1 week agoAmazon Great Freedom Sale deals on smartwatches: Up to 70% off on Samsung, Apple and more – Crypto News

-

others1 week ago

SharpLink Buys the Dip, Acquires $100M in ETH for Ethereum Treasury – Crypto News

-

De-fi1 week ago

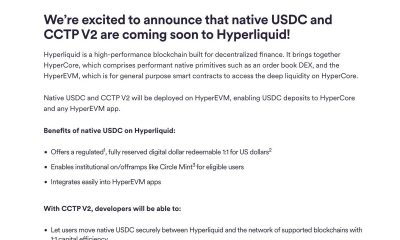

De-fi1 week agoCircle Extends Native USDC to Sei and Hyperliquid in Cross-Chain Push – Crypto News

-

Business1 week ago

Is Quantum Computing A Threat for Bitcoin- Elon Musk Asks Grok – Crypto News

-

Technology1 week ago

Technology1 week agoElon Musk reveals why AI won’t replace consultants anytime soon—and it’s not what you think – Crypto News

-

Cryptocurrency1 week ago

Cryptocurrency1 week agoHow to Trade Meme Coins in 2025 – Crypto News

-

Cryptocurrency1 week ago

Cryptocurrency1 week agoLido Slashes 15% of Staff, Cites Operational Cost Concerns – Crypto News

-

others1 week ago

others1 week agoIs Friday’s sell-off the beginning of a downtrend? – Crypto News

-

others1 week ago

Pi Network Invests In OpenMiind’s $20M Vision for Humanoid Robots- Is It A Right Move? – Crypto News

-

Business1 week ago

Pi Network Invests In OpenMiind’s $20M Vision for Humanoid Robots- Is It A Right Move? – Crypto News

-

others7 days ago

MetaPlanet Launches Online Clothing Store As Part of ‘Brand Strategy’ – Crypto News

-

Metaverse6 days ago

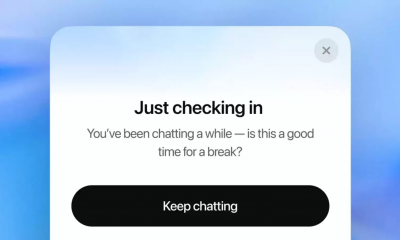

Metaverse6 days agoChatGPT won’t help you break up anymore as OpenAI tweaks rules – Crypto News

-

Technology6 days ago

Technology6 days agoiPhone users alert! Truecaller to discontinue call recording feature for iOS from September 30. Here’s what you can do… – Crypto News

-

Technology6 days ago

Technology6 days agoiPhone users alert! Truecaller to discontinue call recording feature for iOS from September 30. Here’s what you can do… – Crypto News

-

others6 days ago

others6 days agoUS President Trump issues executive order imposing additional 25% tariff on India – Crypto News

-

Business6 days ago

Analyst Predicts $4K Ethereum Rally as SEC Clarifies Liquid Staking Rules – Crypto News

-

Business5 days ago

XRP Price Prediction As $214B SBI Holdings Files for XRP ETF- Analyst Sees Rally to $4 Ahead – Crypto News

-

others5 days ago

others5 days agoEUR firmer but off overnight highs – Scotiabank – Crypto News

-

Blockchain5 days ago

Blockchain5 days agoTrump to Sign an EO Over Ideological Debanking: Report – Crypto News

-

De-fi5 days ago

De-fi5 days agoRipple Expands Its Stablecoin Payments Infra with $200M Rail Acquisition – Crypto News

-

others4 days ago

others4 days agoRipple To Gobble Up Payments Platform Rail for $200,000,000 To Support Transactions via XRP and RLUSD Stablecoin – Crypto News

-

Technology3 days ago

Technology3 days agoHumanoid Robots Still Lack AI Technology, Unitree CEO Says – Crypto News

-

Cryptocurrency3 days ago

DWP Management Secures $200M in XRP Post SEC-Win – Crypto News

-

Business1 week ago

Is Powell Next As Fed Governor Adriana Kugler Resigns? – Crypto News

-

Cryptocurrency1 week ago

Cryptocurrency1 week agoTron Eyes 40% Surge as Whales Pile In – Crypto News

-

Technology1 week ago

Technology1 week agoAmazon Great Freedom Festival Sale 2025 vs Flipkart Freedom Sale: Comparing MacBook deals – Crypto News

-

Business1 week ago

India’s Jetking Targets 21,000 Bitcoin By 2032 As CFO Foresees $1M+ Price – Crypto News

-

De-fi1 week ago

De-fi1 week agoCrypto Markets Stall as Trump’s Crypto Policy Report Fails to Spark Momentum – Crypto News